System considerations

This reference architecture uses four server nodes built on commodity hardware. The server configuration is as follows:

| Node | Processors | RAM | Network | Storage |
|---|---|---|---|---|
| HPC Head Node | 2x Intel® Xeon Processor E5-2680 v3 | 64GB | 2x integrated 1 GbE ports | 1TB SATA 3.5" 7.2K hard drive |
| Node01 | 2x Intel® Xeon Processor E5-2680 v3 | 64GB | 2x integrated 1 GbE ports | None |
| Node02 | 2x Intel® Xeon Processor E5-2680 v3 | 64GB | 2x integrated 1 GbE ports | None |
| Node03 | 2x Intel® Xeon Processor E5-2680 v3 | 64GB | 2x integrated 1 GbE ports | None |

As the table shows, only the HPC Head Node has a hard drive installed. This is because operating system provisioning to the compute nodes is stateless: the system image is provisioned into memory. Storage is managed on the head node; this is not the recommended practice, but it should work for training purposes. Hardware recommendations are shared in later sections.

2.1. Network Connectivity

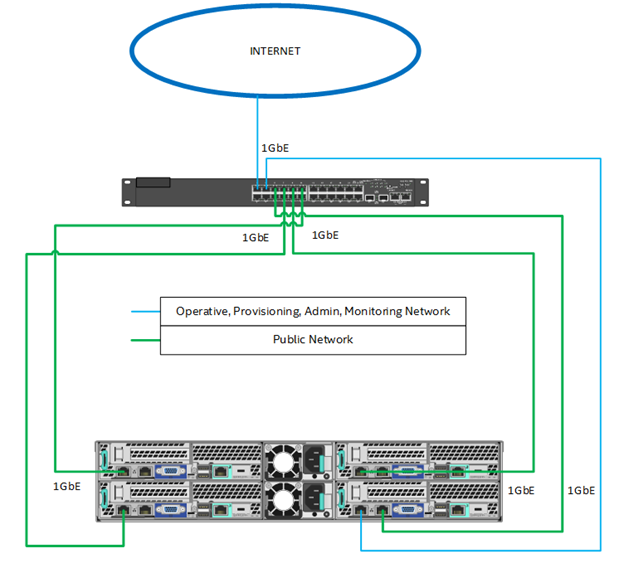

The following networks are configured:

- Operative Network: moves the actual workload across the cluster. It is usually Ethernet (25GbE/100GbE); in this case it is configured with 1GbE, and the same port is shared with other networks.

- Provisioning / Admin: usually 1GbE, used to provision the operating system and to execute remote commands. In the present configuration it is shared with the Operative network.

- Public Network: only the head node uses this network, for internet access and external connections. It is the port labeled 1 on the Head Node.

- Monitoring: shared with the Operative network.

Note: the recommended networking option for the Operative network is Intel® Omni-Path or InfiniBand. The configuration of Intel® Omni-Path is out of scope for this document. You can find the installation guide for the Omni-Path software here: https://www.intel.com/content/dam/support/us/en/documents/network-and-i-o/fabric-products/Intel_OP_Fabric_Software_IG_H76467_v5_0.pdf

The diagram above shows the network architecture.

As the figure shows, only the head node has internet access; the rest of the nodes do not need an external connection. Note that all traffic moves through a single port, which is not recommended in production environments.

2.2. Storage Considerations

When implementing high performance computing in production environments, there are typically two types of data storage:

User storage / Home: usually NFS (Network File System), a distributed file system with moderate read/write performance. It is designed to hold user data that is not being used during an actual HPC operation. In a lab cluster, you can enable NFS mounting of a $HOME file system and the public OpenHPC install path (/opt/ohpc/pub).

Application storage / Scratch: tends to be a Parallel File System (PFS) such as Lustre. A PFS is designed to support high, parallel read/write performance and to hold the application data that is being used during an actual HPC operation.

This storage requires a high-performance, lossless network; think of it as having a second cluster just for storage.

Note: for the current HPC cluster, we are using NFS for both user and application storage. The files are saved in directories on the HPC Head Node.
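As a rough illustration of the NFS setup described above, the sketch below prints candidate export entries for the $HOME file system and the public OpenHPC install path. The export options and `fsid` values are assumptions chosen for a lab cluster, and the function name `print_exports` is hypothetical. The script only prints the entries so they can be reviewed before anything is changed on the head node.

```shell
#!/bin/sh
# Sketch only: print candidate NFS export entries for review.
# The options and fsid values below are assumptions for a lab setup.

# print_exports: emit one export line per shared directory.
print_exports() {
    # $HOME file system: compute nodes need read-write access.
    printf '%s\n' '/home *(rw,no_subtree_check,fsid=10,no_root_squash)'
    # Public OpenHPC install path: read-only access is enough.
    printf '%s\n' '/opt/ohpc/pub *(ro,no_subtree_check,fsid=11)'
}

print_exports

# After reviewing the output, root on the head node would typically:
#   print_exports >> /etc/exports
#   exportfs -a
#   systemctl enable nfs-server
#   systemctl restart nfs-server
```

The `*` client wildcard accepts mounts from any host, which is only reasonable on an isolated lab network; in other environments the entries would normally be restricted to the cluster subnet.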

To expand on parallel file systems, consider Lustre as an example. Lustre is a parallel file system used in a wide range of HPC environments, from small to large, in fields such as oil and gas, seismic processing, the movie industry, and scientific research. These fields share a common problem: ever-increasing amounts of data are being created and need to be processed in a timely manner. In fact, it is the most widely used file system among the world’s Top 500 HPC sites.

Storage and performance capacity can be increased by adding more nodes to the system.

Some of the characteristics of the Lustre File System are:

| Storage System Requirements | Lustre File System Capabilities |
|---|---|
| Maximum file system size | 512PB per file system |
| Maximum file size | 32PB per file |
| High throughput | 2TB/s in a production system |
| Number of files | Up to 10 million files per directory, 2 billion in total per file system. Maximum number of concurrent users: 25,000. |
| High availability | Automated failover |

The configuration of a Lustre cluster is out of the scope of this document. As specified before, the system will be configured using NFS on the head node.

2.3. OpenHPC Installation Guide

- Before starting, check the OpenHPC webpage for the latest version information: http://openhpc.community

- Go to the download section.

- Look for the install recipe that applies to your requirements. This document is based on the CentOS 7.3 recipe for Warewulf + SLURM.

Note: this document is based on OpenHPC 1.3 (31 March 2017).

2.4. Operating System considerations

- Install CentOS version 7.3 x86_64 on the Head Node.

- Profiles selected in the installer: infrastructure server with development tools and remote management.

- Use the default hard drive layout.

- Configure the eth0 port for internet access and the eth1 port for cluster administration and provisioning (use this naming convention or the one configured on your operating system).

- Configure the root password.

- Create a user and add it to the sudoers.
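Once the head node is installed, a quick check like the following can confirm that the release matches the one this document assumes. This is a minimal sketch: the function name `is_centos_73` is hypothetical, and it assumes the release string lives in /etc/centos-release in the form "CentOS Linux release 7.3.1611 (Core)".

```shell
#!/bin/sh
# Sketch only: confirm the head node runs the CentOS release assumed here.

# is_centos_73 FILE: succeed if FILE holds a CentOS 7.3 release string.
is_centos_73() {
    grep -q 'CentOS Linux release 7\.3\.' "$1" 2>/dev/null
}

# Default location of the release file; override for testing.
RELEASE_FILE="${RELEASE_FILE:-/etc/centos-release}"

if is_centos_73 "$RELEASE_FILE"; then
    echo "OK: CentOS 7.3 detected"
else
    echo "WARNING: $RELEASE_FILE does not report CentOS 7.3"
fi

# The recipe targets x86_64, so print the architecture as well.
echo "Architecture: $(uname -m)"

# Creating the administrative user could look like this on CentOS 7,
# where members of the wheel group can use sudo by default
# ("hpcadmin" is a hypothetical user name):
#   useradd -m hpcadmin
#   passwd hpcadmin
#   usermod -aG wheel hpcadmin
```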

2.5. General recommendations

- Take note of the MAC address of the eth0 port of each compute node. You can find this information in the BIOS.

The following table contains the information you may need. This layout must be decided before starting the installation:

| Node Name | Characteristic | Description | Value |
|---|---|---|---|
| Node01 | Interface eth0 | MAC Address | xx:xx:xx:xx:xx:xx |
| Node01 | Interface eth1 | MAC Address | xx:xx:xx:xx:xx:xx |
| Node01 | Hardware | Processor | 2x Intel Xeon E5-2680 v3 2.5GHz |
| Node01 | Hardware | RAM | 64GB |
| Node01 | Hardware | Hard Drive | N/A |
| Node01 | Hardware | Network interface | Intel I350 Dual port Gigabit |
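Because a mistyped MAC address is easy to miss, it can help to validate the recorded values before the installation starts. The sketch below assumes the inventory is kept in a hypothetical comma-separated file with one `name,interface,mac` entry per line; the function names are illustrative only.

```shell
#!/bin/sh
# Sketch only: validate MAC addresses recorded in a simple inventory file.

# is_valid_mac ADDR: succeed if ADDR is six colon-separated hex octets.
is_valid_mac() {
    printf '%s\n' "$1" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
}

# check_inventory FILE: FILE holds lines of the form "name,interface,mac".
# Prints one status line per entry and returns 1 if any MAC is invalid.
check_inventory() {
    bad=0
    while IFS=, read -r name iface mac; do
        [ -n "$name" ] || continue      # skip empty lines
        if is_valid_mac "$mac"; then
            echo "ok      $name $iface $mac"
        else
            echo "INVALID $name $iface $mac"
            bad=1
        fi
    done < "$1"
    return "$bad"
}

# Example call (hypothetical file name):
#   check_inventory nodes.csv
```

On a node that is already booted into Linux, `cat /sys/class/net/eth0/address` prints the address of that interface, which can be compared against the value noted from the BIOS.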

- Document the network mapping and port assignment from the servers to the switches.

- It is recommended to update your software with yum -y update before starting the installation.

- At the beginning you only need to worry about the head node configuration; once this node is correctly configured you can take care of the compute nodes.

- Keep track of all the commands you execute on the server and document the results; this will help during the troubleshooting process.
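One possible way to keep that record is a small wrapper that writes each command, its output, and its exit status to a log file. This is a sketch under assumptions: the function name `log_run` and the default log location are hypothetical, and output goes to the log instead of the terminal.

```shell
#!/bin/sh
# Sketch only: record commands, their output, and exit status in a log file.

# Hypothetical log location; override by setting LOG_FILE beforehand.
LOG_FILE="${LOG_FILE:-$HOME/hpc-install.log}"

# log_run CMD [ARGS...]: run CMD, appending a timestamped entry to LOG_FILE.
log_run() {
    printf '[%s] $ %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*" >> "$LOG_FILE"
    "$@" >> "$LOG_FILE" 2>&1
    status=$?
    printf '[exit status %s]\n' "$status" >> "$LOG_FILE"
    return "$status"
}

# Example calls on the head node:
#   log_run yum -y update
#   tail "$LOG_FILE"
```

If a full transcript of an interactive session is preferred, the `script` utility (for example `script -a install-session.log`) records everything shown in the terminal until the session is closed with `exit`.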